During the past 7 business days I took part in a training course on SIMATIC IT XHQ 4.0.

I participated in the Basic (21-22 Jan) and Advanced (25-29 Jan) training.

The training was given by engineer Fabio Terasaka, a team lead at Chemtech with over 3 years of experience using and deploying XHQ in Brazil and internationally.

I decided to write this post so that people can get an overview of XHQ from a consultant/developer perspective.

I’m also excited about the endless possibilities XHQ has to offer when it comes to optimizing and applying intelligence to an enterprise.

What is XHQ?

For those who don’t know XHQ or have never heard of it, here is a succinct description taken from its official site:

SIMATIC IT XHQ Operations Intelligence product line aggregates, relates and presents operational and business data in real-time to improve enterprise performance. Through SIMATIC IT XHQ, you have a single coherent view of information, enabling a variety of solutions in real-time performance management and decision support. [1]

XHQ extracts data from a variety of systems, such as production (PIMS), laboratory (LIMS) and plant-floor systems. XHQ unifies all the operational and management data in a single real-time view, allowing you to take a snapshot of the whole enterprise, minute by minute or second by second.

XHQ can be integrated into an intranet or an operations management website, bringing together production data such as raw material and equipment usage and inventory levels, as well as product-related data (temperature, pressure, electrical current), quality and maintenance data.

XHQ implements the concepts of operational dashboards and performance management [2] based on Key Performance Indicators (KPIs) [3].
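To make the KPI idea concrete, the following minimal Python sketch shows how a dashboard might compare an actual value with its target and turn the result into a traffic-light status. The `Kpi` class, the 90% warning threshold and the sample figures are hypothetical; they illustrate the concept and are not part of XHQ.

```python
from dataclasses import dataclass


@dataclass
class Kpi:
    """Hypothetical KPI: an actual value compared with a target.

    Assumes "higher is better" (e.g. throughput); a cost KPI would
    invert the comparison.
    """

    name: str
    actual: float
    target: float
    warning_ratio: float = 0.9  # assumed threshold for the yellow band

    def achievement(self) -> float:
        """Fraction of the target reached (1.0 means on target)."""
        return self.actual / self.target

    def status(self) -> str:
        """Map the achievement ratio to a traffic-light colour."""
        ratio = self.achievement()
        if ratio >= 1.0:
            return "green"
        if ratio >= self.warning_ratio:
            return "yellow"
        return "red"


if __name__ == "__main__":
    throughput = Kpi("Daily throughput (t)", actual=950.0, target=1000.0)
    print(throughput.name, f"{throughput.achievement():.0%}", throughput.status())
    # prints: Daily throughput (t) 95% yellow
```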

XHQ is used in the energy, petrochemical and manufacturing industries to aggregate business and operational data, relate it, and then depict it graphically.

XHQ Timeline

XHQ was created in 1996 by IndX Software Corp, an American company based in Aliso Viejo, California, USA.

In December 2003, Siemens expanded its IT portfolio by acquiring IndX [4].

In December 2009, Chemtech (a Siemens company) absorbed the company responsible for XHQ around the world [5].

XHQ Architecture

XHQ has a modular architecture, as shown in the following picture:

Back-end Operational Systems

Composed of databases and their respective connectors, which give access to real-time business data: time-series data (PHD, PI, OPC), real-time point data (Tags), relational databases (Oracle, MS-SQL), enterprise applications (SAP), etc.
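The connector layer can be pictured as one common interface that hides each back end’s access details. In this Python sketch, `Connector`, `InMemoryHistorian`, `SqlConnector` and `snapshot` are hypothetical names: the in-memory historian stands in for a time-series source such as PI or PHD, and an SQLite database stands in for Oracle or MS-SQL. It is an illustration, not XHQ’s connector API.

```python
import sqlite3
from abc import ABC, abstractmethod


class Connector(ABC):
    """Hypothetical common interface implemented by every back-end connector."""

    @abstractmethod
    def read(self, tag: str) -> float:
        """Return the current value of `tag` from the back end."""


class InMemoryHistorian(Connector):
    """Stand-in for a time-series source: keeps the latest value per tag."""

    def __init__(self) -> None:
        self._latest: dict[str, float] = {}

    def write(self, tag: str, value: float) -> None:
        self._latest[tag] = value

    def read(self, tag: str) -> float:
        return self._latest[tag]


class SqlConnector(Connector):
    """Stand-in for a relational source queried with parameterized SQL."""

    def __init__(self, conn: sqlite3.Connection, query: str) -> None:
        self._conn = conn
        self._query = query  # must take the tag as its single parameter

    def read(self, tag: str) -> float:
        row = self._conn.execute(self._query, (tag,)).fetchone()
        if row is None:
            raise KeyError(tag)
        return float(row[0])


def snapshot(sources: dict[str, Connector], owners: dict[str, str]) -> dict[str, float]:
    """Unified view: read each tag from the connector that owns it."""
    return {tag: sources[owner].read(tag) for tag, owner in owners.items()}


if __name__ == "__main__":
    historian = InMemoryHistorian()
    historian.write("FI-101", 42.5)
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE stock (tag TEXT PRIMARY KEY, qty REAL)")
    db.execute("INSERT INTO stock VALUES ('TK-01', 1200.0)")
    sql = SqlConnector(db, "SELECT qty FROM stock WHERE tag = ?")
    print(snapshot({"pims": historian, "sql": sql}, {"FI-101": "pims", "TK-01": "sql"}))
    # prints: {'FI-101': 42.5, 'TK-01': 1200.0}
```

The `snapshot` function mirrors the single-view idea: callers ask for tags without needing to know which system owns each one.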

Middle Tier

Composed of the set of XHQ servers, each of which plays a specific role in the system:

XHQ Enterprise Server manages the end users’ views of data, which are created by XHQ developers.

XHQ Solution Server hosts the Real-time Data Cache and the Relational Data Cache, which remove the burden of retrieving data from the back ends.

XHQ Alert Notification Server (XANS) is the XHQ subsystem responsible for alerting end users about any inconsistency in the system.

Third-party web servers such as IIS and Tomcat give end users access to the data processed by XHQ.
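The alerting role of XANS can be sketched as limit checking followed by delivery. The Python below assumes a hypothetical `AlertRule` with low/high limits and treats recipients as plain callables; XANS’s actual rule model and delivery channels are not covered here.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class AlertRule:
    """Hypothetical rule: alert when a tag leaves the range [low, high]."""
    tag: str
    low: float
    high: float


def evaluate(rules: list[AlertRule], values: dict[str, float]) -> list[str]:
    """Return one message per rule whose tag is outside its limits."""
    alerts = []
    for rule in rules:
        value = values.get(rule.tag)
        if value is None:
            continue  # no reading for this tag yet
        if value < rule.low or value > rule.high:
            alerts.append(f"{rule.tag}={value} outside [{rule.low}, {rule.high}]")
    return alerts


def notify(alerts: list[str], recipients: list[Callable[[str], None]]) -> None:
    """Deliver every alert to every recipient (a real notifier would use
    actual delivery channels instead of callables)."""
    for message in alerts:
        for send in recipients:
            send(message)


if __name__ == "__main__":
    rules = [AlertRule("TI-200", low=20.0, high=80.0)]
    inbox: list[str] = []
    notify(evaluate(rules, {"TI-200": 95.0}), [inbox.append])
    print(inbox)
    # prints: ['TI-200=95.0 outside [20.0, 80.0]']
```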

User Interface

Users can access XHQ processed data (Views) using PDAs, web browsers, etc.

Users also have access to View Statistics, a kind of Google Analytics for XHQ. It shows default reports on peak and average user count, user and view hits by month and by week, view usage by user per day, view usage per day, etc. You can also create your own analytics reports using custom SQL.
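Reports like these boil down to aggregate SQL over access records. The following Python sketch uses an in-memory SQLite database with a hypothetical `view_hits` table (XHQ’s real statistics schema is an assumption here) to compute view hits by month and the peak and average number of distinct users per day.

```python
import sqlite3

# Hypothetical table: one row per view access. The real XHQ statistics
# schema is not documented here, so every name below is illustrative.
SCHEMA = "CREATE TABLE view_hits (user_name TEXT, view_name TEXT, hit_date TEXT)"

# View hits per month ("user and view hits by month").
HITS_BY_MONTH = """
    SELECT substr(hit_date, 1, 7) AS month, view_name, COUNT(*) AS hits
    FROM view_hits
    GROUP BY month, view_name
    ORDER BY month, view_name
"""

# Peak and average number of distinct users per day.
USERS_PER_DAY = """
    SELECT MAX(users), AVG(users)
    FROM (SELECT hit_date, COUNT(DISTINCT user_name) AS users
          FROM view_hits
          GROUP BY hit_date)
"""


def load_hits(rows: list[tuple[str, str, str]]) -> sqlite3.Connection:
    """Create an in-memory database filled with (user, view, ISO date) rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute(SCHEMA)
    conn.executemany("INSERT INTO view_hits VALUES (?, ?, ?)", rows)
    return conn


if __name__ == "__main__":
    conn = load_hits([
        ("ana", "Refinery", "2010-01-25"),
        ("bob", "Refinery", "2010-01-25"),
        ("ana", "Tanks", "2010-01-26"),
        ("ana", "Refinery", "2010-02-01"),
    ])
    print(conn.execute(HITS_BY_MONTH).fetchall())
    # prints: [('2010-01', 'Refinery', 2), ('2010-01', 'Tanks', 1), ('2010-02', 'Refinery', 1)]
    print(conn.execute(USERS_PER_DAY).fetchone())
```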

Starting with XHQ 4.0, there’s a separate application called Visual Composer that enables developers to create dynamic, highly customizable data views. Visual Composer can use XHQ data collections as its data source.

Visual Composer focuses on graphics/charts and tables/grids to show strategic business content.

XHQ behind the Curtains

XHQ does its job using a subscription model based on a client-server architecture. Clients are automatically notified of changes to the monitored variables. For example, if a user opens a view containing 2 plant variables whose poll period (configured in the connector or on the variable itself) is set to 2 seconds, the user’s screen automatically refreshes (using Ajax) to show the new variable values every 2 seconds. This is the so-called real-time process management.
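The subscription-plus-polling behaviour can be sketched as a server-side hub that polls each variable at its own period and pushes only changed values to subscribers. `PollingHub` and its methods are hypothetical, time is simulated with explicit `tick` calls instead of a real timer, and the browser-side Ajax refresh is left out.

```python
from typing import Callable

Listener = Callable[[str, float], None]


class PollingHub:
    """Hypothetical server-side hub: polls each variable at its own period
    and pushes a value to subscribers only when it has changed."""

    def __init__(self, read: Callable[[str], float]) -> None:
        self._read = read                          # back-end read function
        self._period: dict[str, float] = {}        # tag -> poll period (s)
        self._next_due: dict[str, float] = {}      # tag -> next poll time (s)
        self._last: dict[str, float] = {}          # tag -> last value pushed
        self._subscribers: dict[str, list[Listener]] = {}

    def subscribe(self, tag: str, period: float, listener: Listener,
                  now: float = 0.0) -> None:
        """Register a listener; the latest period given for a tag wins."""
        self._period[tag] = period
        self._next_due.setdefault(tag, now)
        self._subscribers.setdefault(tag, []).append(listener)

    def tick(self, now: float) -> None:
        """Poll every tag whose period has elapsed and notify on change."""
        for tag, due in list(self._next_due.items()):
            if now < due:
                continue
            self._next_due[tag] = now + self._period[tag]
            value = self._read(tag)
            if self._last.get(tag) != value:
                self._last[tag] = value
                for listener in self._subscribers[tag]:
                    listener(tag, value)


if __name__ == "__main__":
    plant = {"PI-300": 10.0}
    received: list[tuple[str, float]] = []
    hub = PollingHub(lambda tag: plant[tag])
    hub.subscribe("PI-300", period=2.0, listener=lambda t, v: received.append((t, v)))
    for second in range(7):
        if second == 3:
            plant["PI-300"] = 12.5  # the value changes on the plant floor
        hub.tick(float(second))
    print(received)
    # prints: [('PI-300', 10.0), ('PI-300', 12.5)]
```

In the usage example, the change made at second 3 only reaches the subscriber at second 4, the next poll: the poll period bounds how fresh the user’s screen can be.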

The XHQ core is implemented in Java, and a Java applet loaded in the browser presents the data to the user.

XHQ makes extensive use of JavaScript to inject customization points into the software.

Server configuration is kept in .properties files, which makes it easy to edit.
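Because .properties files are plain key/value text, reading them takes very little code. This Python sketch handles the common subset (comments starting with `#` or `!`, `=` or `:` separators) and deliberately skips line continuations and escape sequences; the keys in the example are made up, not real XHQ settings. On the Java side, `java.util.Properties` implements the full format.

```python
def parse_properties(text: str) -> dict[str, str]:
    """Minimal .properties reader (simplified: no line continuations,
    no escape sequences, no whitespace-only separators)."""
    props: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line[0] in "#!":
            continue  # blank line or comment
        # Split at the first '=' or ':', whichever comes first.
        positions = [i for i in (line.find("="), line.find(":")) if i != -1]
        if not positions:
            props[line] = ""  # key without a value
            continue
        sep = min(positions)
        props[line[:sep].strip()] = line[sep + 1:].strip()
    return props


if __name__ == "__main__":
    sample = """
# hypothetical server settings
server.name = XHQ-DEV
cache.refresh.seconds=2
! another comment
log.dir: C:/logs
"""
    print(parse_properties(sample))
    # prints: {'server.name': 'XHQ-DEV', 'cache.refresh.seconds': '2', 'log.dir': 'C:/logs'}
```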

Data presented to the user comes from “Collections”, which use high-performance data caches stored in XHQ’s own local databases. You can use live data from the back end, but this is not advisable because of the overhead involved. The performance gains are most noticeable when many users are using the same view.
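The benefit of a shared cache grows with the number of users reading the same collection. This Python sketch of a time-to-live cache is hypothetical (`CollectionCache`, `ttl_seconds` and the injectable clock are illustrative names, not XHQ configuration) and counts back-end calls so the effect is visible.

```python
import time
from typing import Callable


class CollectionCache:
    """Hypothetical shared cache: every user reading the same collection
    is served one cached copy until it is older than `ttl_seconds`."""

    def __init__(self, fetch: Callable[[str], object], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch      # expensive back-end query
        self._ttl = ttl_seconds
        self._clock = clock      # injectable so the demo can control time
        self._entries: dict[str, tuple[float, object]] = {}
        self.backend_calls = 0

    def get(self, collection: str) -> object:
        now = self._clock()
        entry = self._entries.get(collection)
        if entry is not None and now - entry[0] < self._ttl:
            return entry[1]  # fresh enough: no back-end round trip
        self.backend_calls += 1
        value = self._fetch(collection)
        self._entries[collection] = (now, value)
        return value


if __name__ == "__main__":
    fake_now = [0.0]
    cache = CollectionCache(lambda name: f"rows of {name}", ttl_seconds=2.0,
                            clock=lambda: fake_now[0])
    for _user in range(100):  # 100 users open the same view
        cache.get("Production")
    print(cache.backend_calls)  # prints: 1
    fake_now[0] = 2.5           # the cached copy has expired
    cache.get("Production")
    print(cache.backend_calls)  # prints: 2
```

With 100 users inside one refresh window, the back end is queried once instead of 100 times. The sketch is not thread-safe; a real server would need locking around `get`.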

Skills demanded by XHQ

To get XHQ up and running you’ll need the following skills:

SQL query skills. SQL is used all the time to retrieve the right data from the back-ends.

XML and XSLT skills. Both are needed to configure data points (Tags) in the system and to export data.

Prior software development experience with the .NET Framework or Java is important for developing XHQ extension points.

JavaScript skills. Used to define custom system variables and client configuration.

HTML and CSS skills. Used to customize the user interface.

Web server administration using IIS and Tomcat is a plus when it comes to deploying the solution at the customer site.

Computer network skills. Used to detect any problem between clients and servers.

Solid debugging skills related to the above-mentioned technologies. If something goes wrong, you’ll need to check a lot of log files (there is one for each agent in the system).
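With one log file per agent, a useful first debugging step is to scan all of them for errors at once. This Python sketch assumes the logs live in a single directory, end in `.log` and mark problems with the word `ERROR`; the file names in the example are hypothetical.

```python
import tempfile
from pathlib import Path


def find_errors(log_dir: str, keyword: str = "ERROR") -> dict[str, list[str]]:
    """Return the lines containing `keyword` from every *.log file in
    `log_dir`, keyed by file name (one file per agent)."""
    hits: dict[str, list[str]] = {}
    for path in sorted(Path(log_dir).glob("*.log")):
        with path.open(encoding="utf-8", errors="replace") as handle:
            matches = [line.rstrip("\n") for line in handle if keyword in line]
        if matches:
            hits[path.name] = matches
    return hits


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        # Hypothetical agent log files, created only for this demo.
        Path(tmp, "enterprise_server.log").write_text(
            "INFO started\nERROR connector timeout\n", encoding="utf-8")
        Path(tmp, "xans.log").write_text("INFO idle\n", encoding="utf-8")
        print(find_errors(tmp))
        # prints: {'enterprise_server.log': ['ERROR connector timeout']}
```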

XHQ Implementation

XHQ consultants/engineers are responsible for studying the needs of customers who want to optimize their enterprise.

The following are the 10 basic steps followed when XHQ is chosen for business optimization:

01 - XHQ is installed on client premises;

02 - Groups of users and use cases are defined;

03 - Connectors are created to access data sources scattered all over the enterprise;

04 - A solution model is defined;

05 - A navigation model is defined;

06 - Views of data for different audiences and activities are built;

07 - System components and collections of data are linked for data retrieval;

08 - The solution is updated, tested and optimized;

09 - Steps 1 through 7 are iterated;

10 - Security is applied in the solution model through the use of roles/user groups.

XHQ Value as a RtPM Tool

XHQ adds value to your business as an RtPM (Real-time Process Management) tool:

Using XHQ, operational costs may decrease by an average of 8% each year, while the production of high-value products may increase by 10.5%. This is because XHQ supports the management board in the decision-making process [6].

The following are 10 basic reasons why XHQ adds value to the business:

01 - Directors and staff can make their decisions based on the same information;

02 - Response times are dramatically reduced;

03 - Information is made available from one area to another (and vice versa);

04 - Interfaces between one area and another, usually managed by different teams and systems, can be closely monitored;

05 - User-friendly, self-explanatory process schematics simplify plant management;

06 - Reduced load on mission-critical systems: read-only users can work exclusively in XHQ;

07 - Leverage of other investments: PIMS utilization increases, and PIMS become mission-critical as well;

08 - Intangible gain: rethinking strategies for company needs in terms of which information is considered critical for business decisions;

09 - Integration with enterprise applications such as SAP R/3: logistics is greatly improved by monitoring supply and distribution movements;

10 - Incoming and outgoing transport can be monitored graphically.

XHQ Customers

XHQ is used throughout the world.

The following are some of the customers already using XHQ to optimize their business:

CSN, ExxonMobil, Chevron, Dow Chemical and Saudi Aramco, among others.

Interested in optimizing your business?

If you’re looking for business optimization/intelligence, you can contact Chemtech for more information.

References

[1] SIMATIC IT XHQ official site

[2] Business Performance Management

[4] Siemens expands its IT portfolio in process industries (PDF file)

[5] Chemtech absorbs the company responsible for XHQ around the world

[6] Siemens of Brazil Press Information (in Portuguese)

[7] XHQ for Steel Mills Real Time Performance Management (PDF file)

[8] XHQ can gather information from the whole oil & gas production chain

[9] Chemtech enters into the IndX’s biggest XHQ project in Brazil

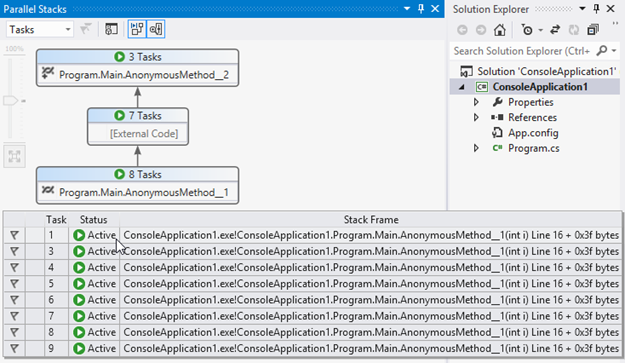

Figure 3 - The Parallel Stacks window in Visual Studio 2012 ( #8 threads )
